Python & Basic AWS S3 Operations: Upload, Download, and Delete Files

Efficient File Management in Amazon S3 using Boto3 Library for Python

Efficient, secure file management matters for almost any project that handles data, and cloud storage services such as Amazon S3 (Simple Storage Service) are a common way to get it. This article walks through a short Python script that uses the Boto3 library to perform three fundamental S3 operations: uploading, downloading, and deleting files. It is meant as a practical starting point for handling files in S3 from your own projects.

What is AWS S3?

Amazon S3, also known as Simple Storage Service, is an object storage service provided by Amazon Web Services (AWS). It is a popular storage solution that allows users to easily store and retrieve any amount of data from anywhere on the web. With a simple web interface and reliable infrastructure, developers can host and manage their data with ease. Amazon S3 is flexible, cost-effective, and provides high durability, making it a go-to choice for various applications. This service has become a cornerstone for businesses and developers who need to store and retrieve data seamlessly, including static website content, application backups, or large datasets for analytics.

What are the key features of S3?

Scalability: S3 is designed to scale effortlessly, accommodating any amount of data, from small files to massive datasets, without requiring changes to the application architecture.

Durability and Reliability: S3 is engineered to provide 99.999999999% (11 nines) durability of objects over a given year, making it highly reliable for storing critical data.

Data Accessibility: S3 allows users to access their stored data from anywhere on the web, making it a convenient and accessible solution for global applications.

Data Lifecycle Management: Users can define lifecycle policies to automatically transition objects between storage classes or delete them when they are no longer needed, optimizing costs.

Versioning: S3 supports versioning, enabling users to preserve, retrieve, and restore every version of every object stored in a bucket, providing protection against unintended overwrites or deletions.

Security and Access Control: S3 offers fine-grained access controls, allowing users to manage who can access their data. It integrates with AWS Identity and Access Management (IAM) for secure access management.

Data Encryption: S3 supports server-side and client-side encryption to protect data at rest, and requests made over HTTPS (TLS) protect data in transit, helping keep stored information secure and private.

Event Notifications: Users can configure event notifications for their S3 buckets, enabling automated responses to object creation, deletion, and other events.

Multipart Upload: For large files, S3 supports multipart upload, allowing users to upload parts of an object in parallel and then combine them into a single object.

Static Website Hosting: S3 can be used to host static websites directly, providing a cost-effective and scalable solution for web hosting.

Data Transfer Acceleration: S3 Transfer Acceleration uses Amazon CloudFront's globally distributed edge locations to accelerate uploads to and downloads from S3.
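As a rough illustration of the lifecycle feature listed above, the sketch below builds one rule that moves objects under a prefix to a cheaper storage class and later deletes them. The helper names, the `logs/` prefix, and the 30 and 365 day thresholds are assumptions made for this example; `put_bucket_lifecycle_configuration` is the Boto3 client call that applies the rules. The client is passed in as an argument, so nothing here contacts AWS until you call it with a real one.

```python
def build_lifecycle_rule(prefix, transition_days, expire_days):
    """Return one lifecycle rule in the dictionary shape Boto3 expects.

    Hypothetical helper: the rule ID format is an arbitrary choice.
    """
    return {
        "ID": f"archive-then-expire-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        # Move objects to the Infrequent Access class after transition_days
        "Transitions": [{"Days": transition_days, "StorageClass": "STANDARD_IA"}],
        # Delete objects once they are expire_days old
        "Expiration": {"Days": expire_days},
    }


def apply_lifecycle(s3_client, bucket, rules):
    """Replace the bucket's lifecycle configuration with the given rules."""
    return s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={"Rules": rules},
    )


rule = build_lifecycle_rule("logs/", transition_days=30, expire_days=365)
```

With a real client this would be applied as `apply_lifecycle(boto3.client('s3'), 'your-bucket', [rule])`. Note that the call replaces any lifecycle configuration already set on the bucket.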

Let's Begin

Step 1 - Setup AWS S3

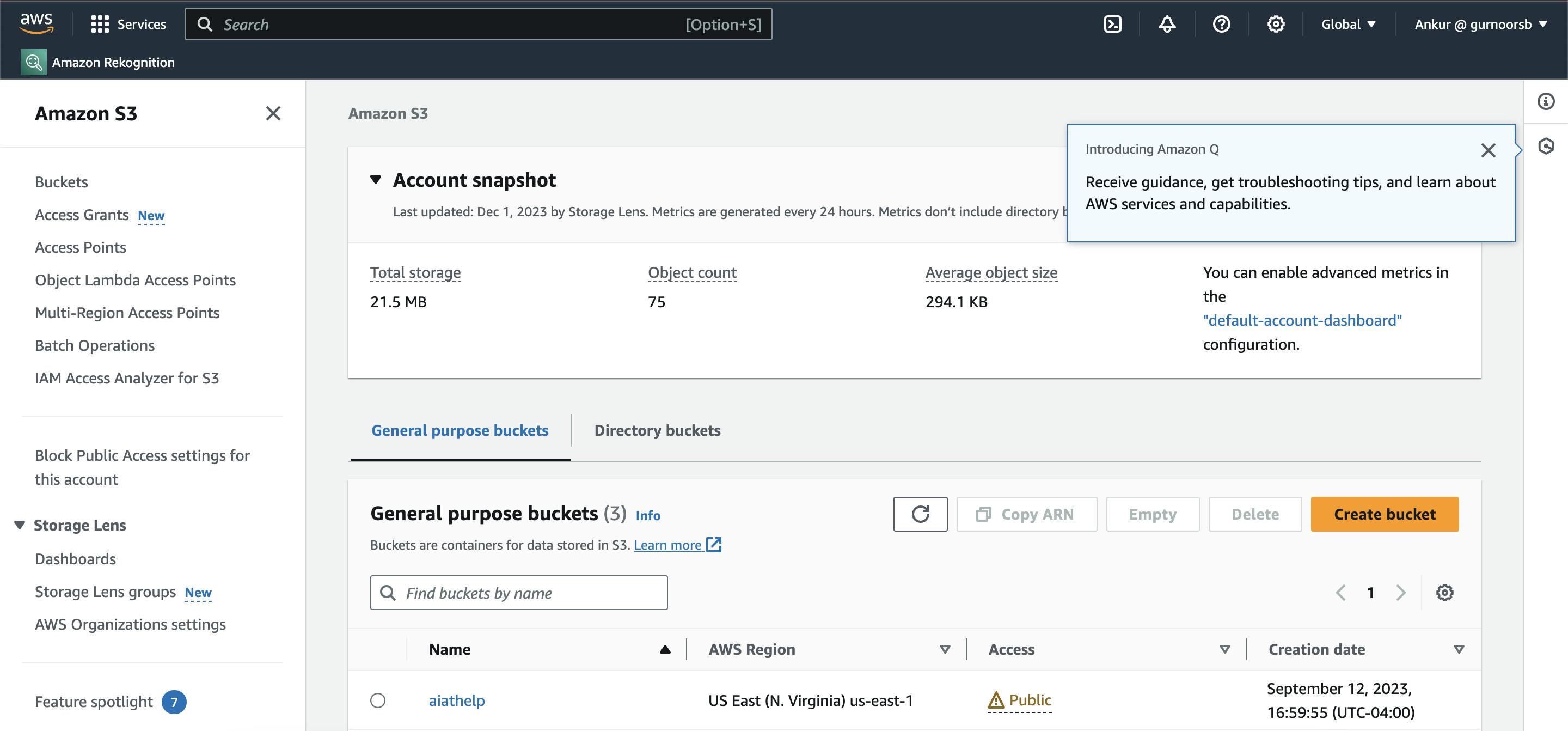

Log in to the AWS Console and select your Region

Go to the S3 section

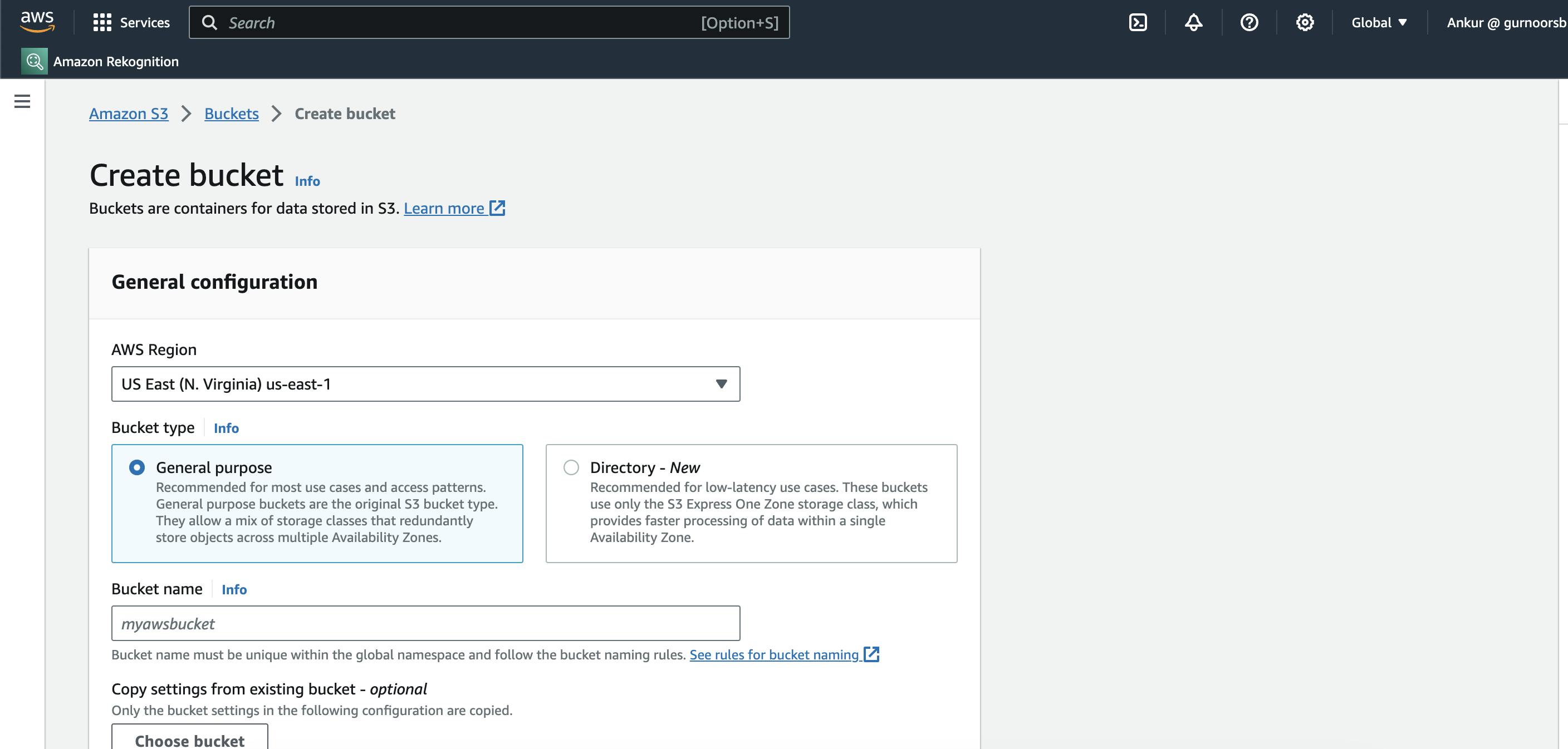

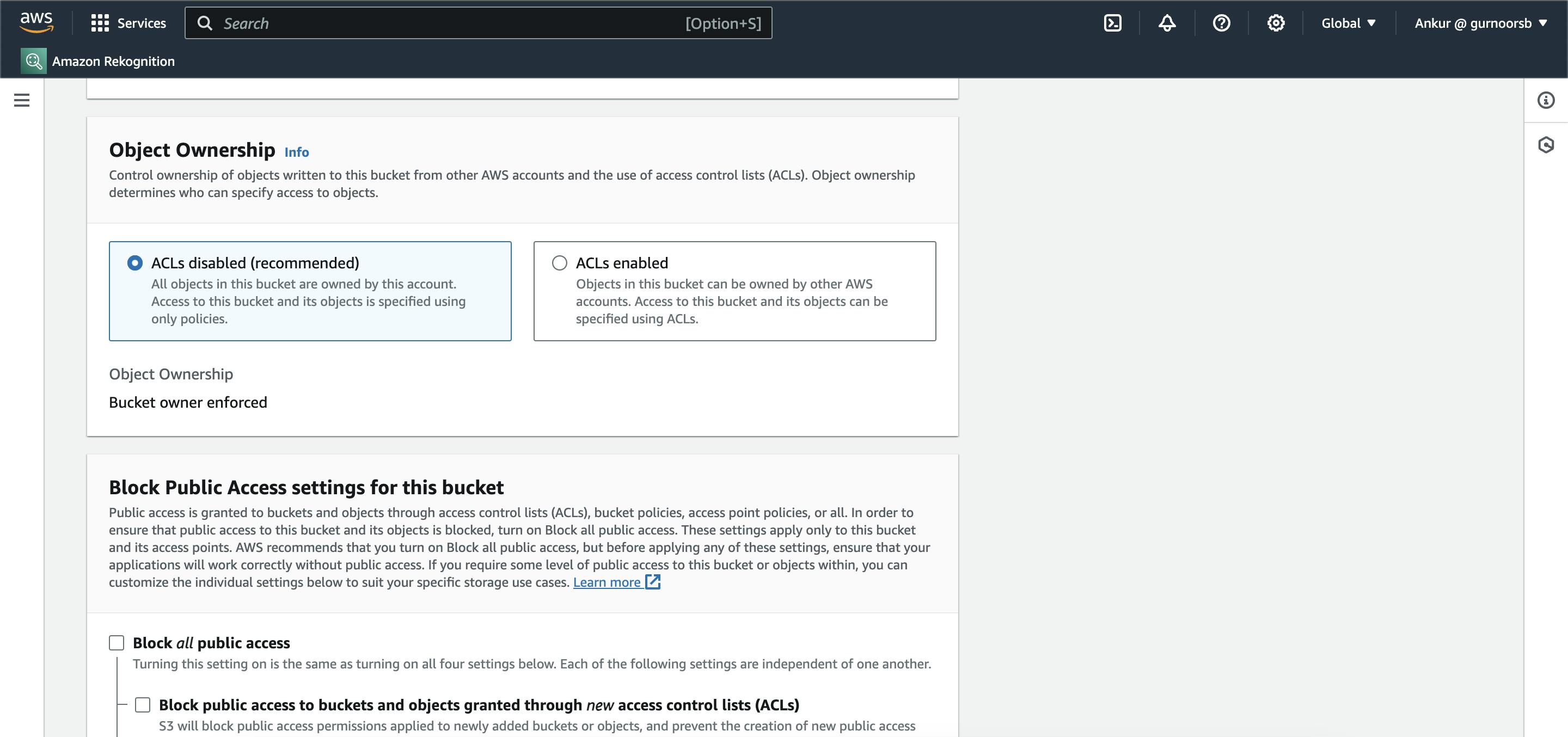

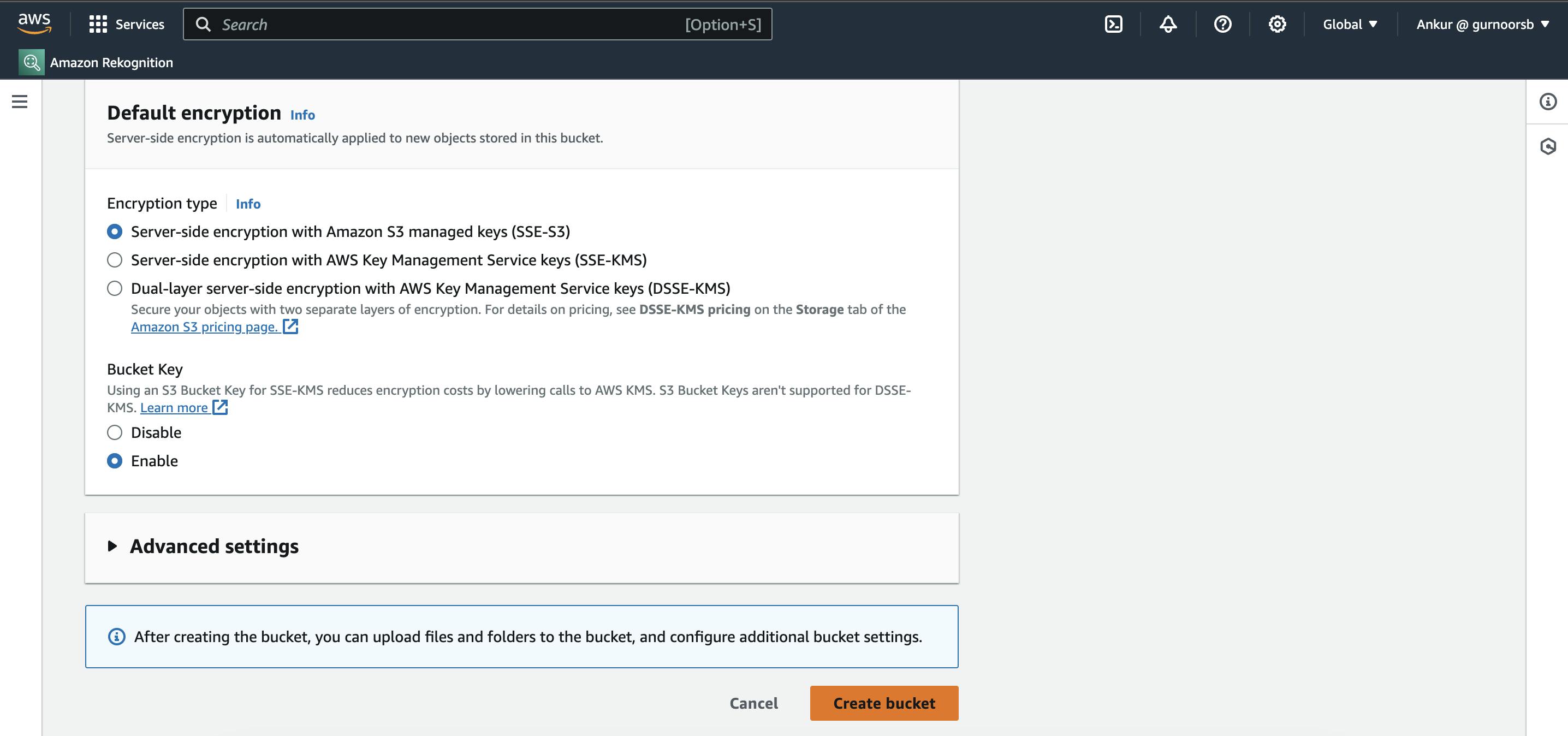

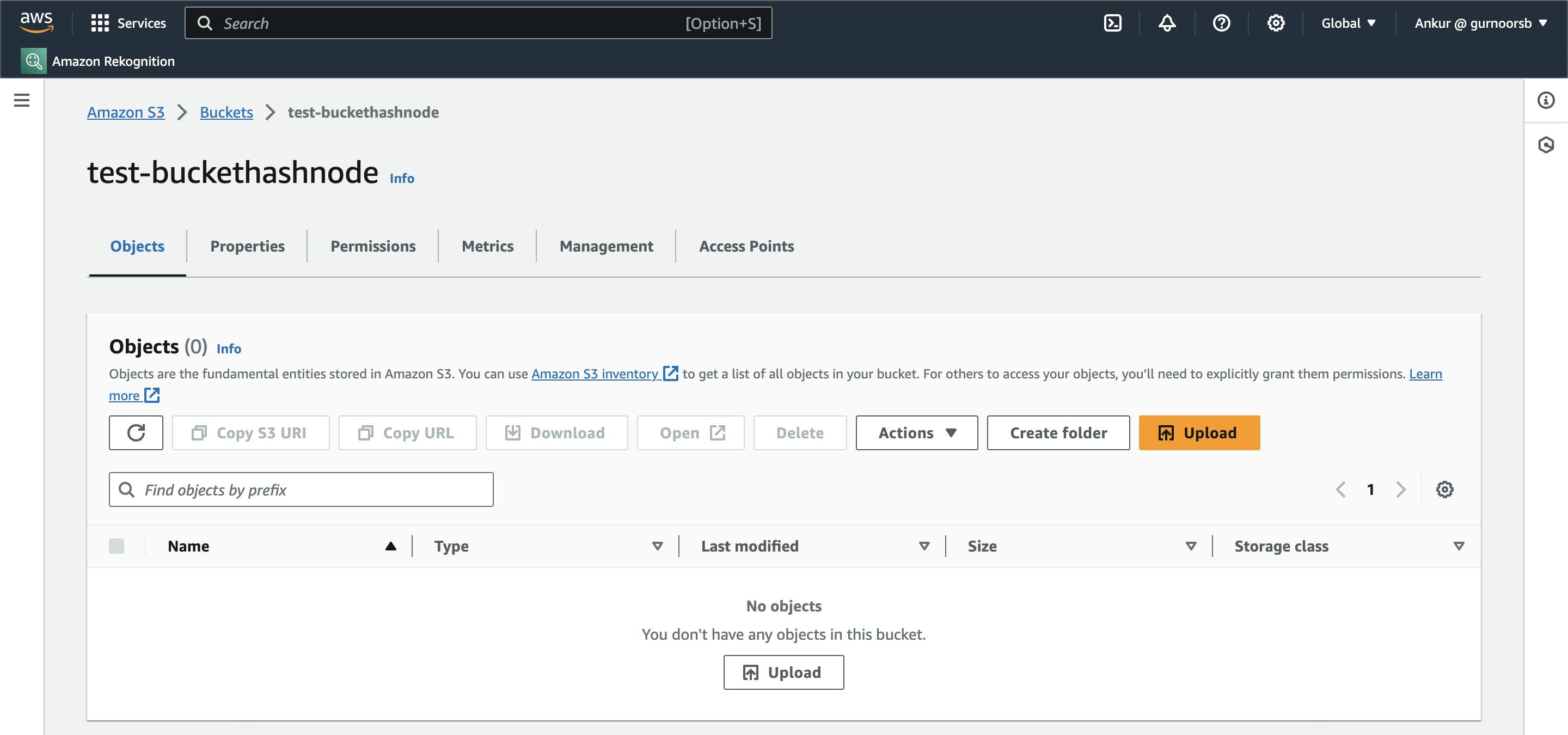

Create a bucket, specify its settings, and enable public access

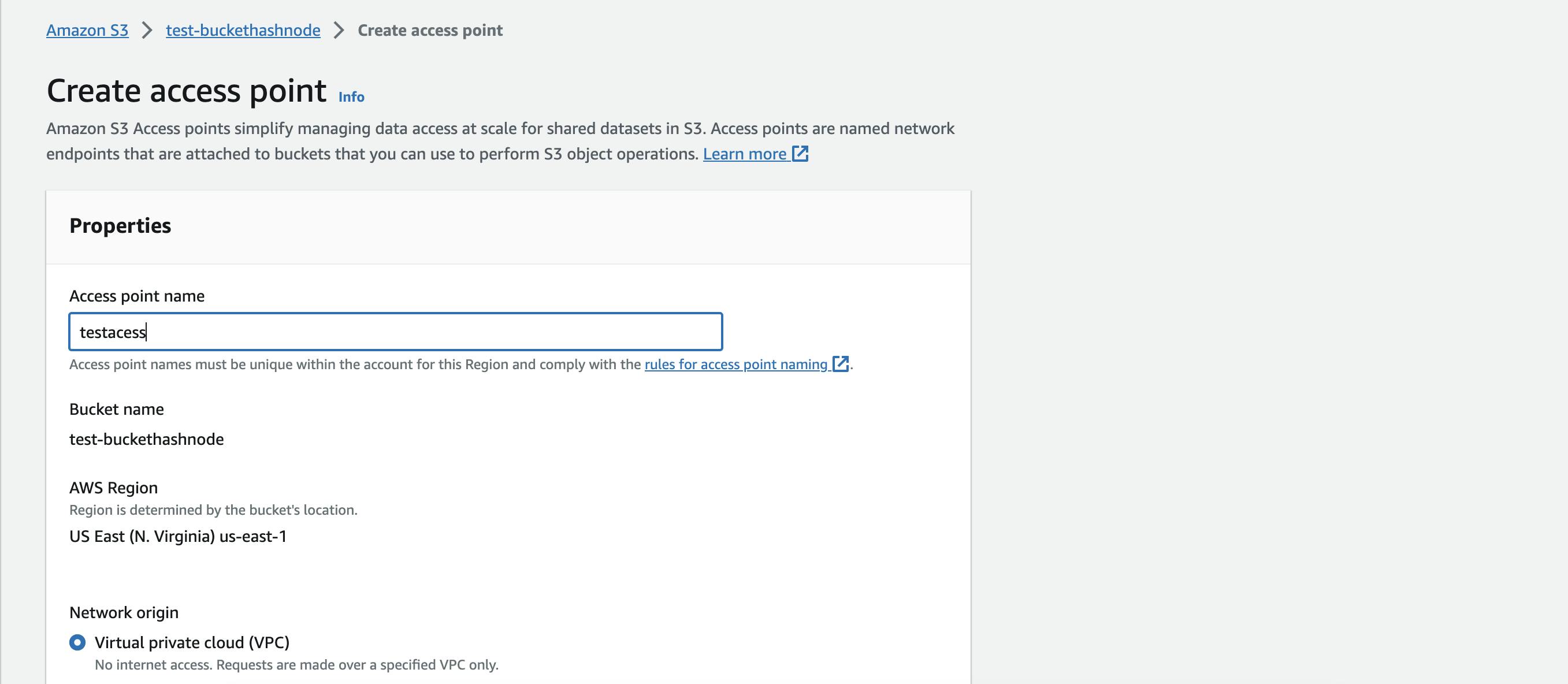

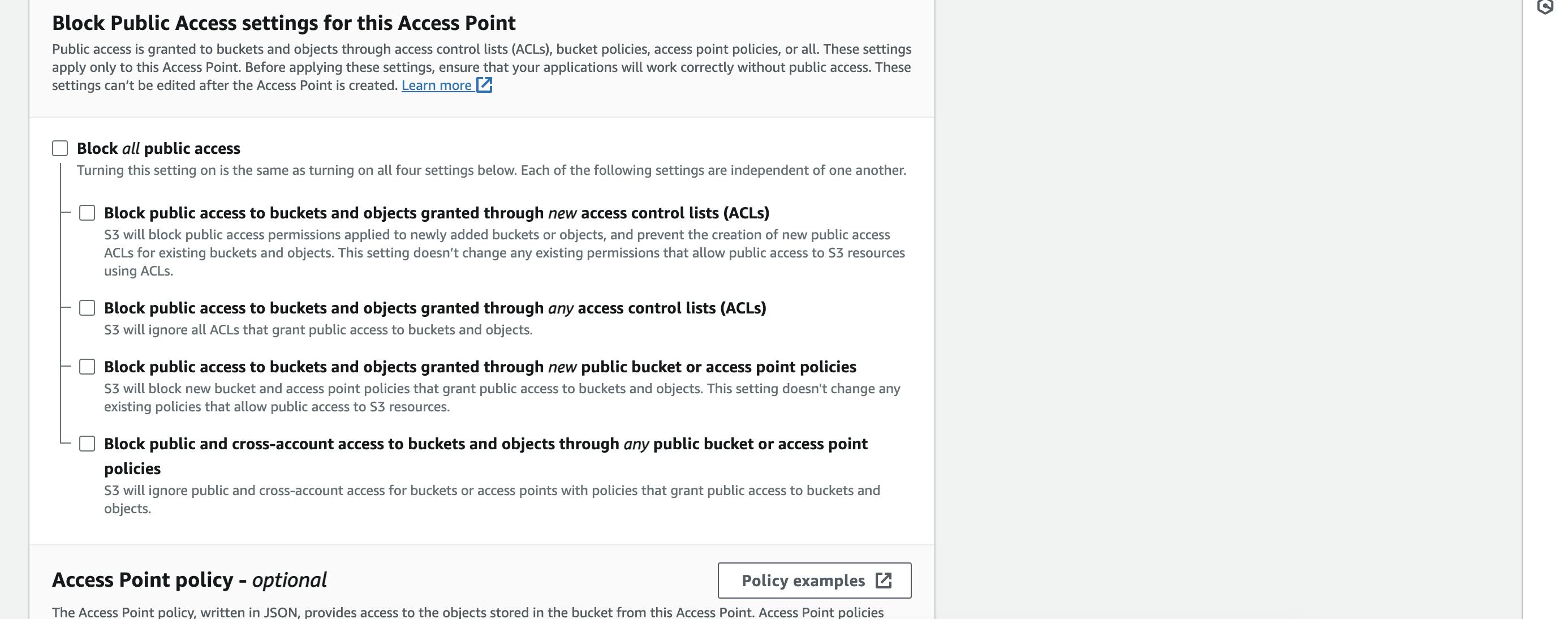

Create access points and enable public access

Step 2 - Installing Libraries

```shell
python3 -m venv venv
source venv/bin/activate
pip install boto3
```

Step 3 - Code

Libraries & Client

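```python
import boto3
import os

# Boto3 reads its credentials from these environment variables.
# 'ACCESS_KEY' and 'SECRET' are placeholders for your own values.
os.environ['AWS_ACCESS_KEY_ID'] = 'ACCESS_KEY'
os.environ['AWS_SECRET_ACCESS_KEY'] = 'SECRET'

s3_client = boto3.client('s3')
bucket = 'test-buckethashnode'
```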
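Boto3 looks up `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` in the environment by itself, so the values can also be exported in the shell instead of being written into the script. The sketch below is one possible pre-flight check for that setup; the function name and the `REQUIRED_VARS` tuple are assumptions made for this example, not part of Boto3.

```python
import os

# Variable names Boto3 reads when credentials come from the environment
REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_credentials(env=None):
    """Return the names of required credential variables that are unset or empty.

    Hypothetical helper: env defaults to os.environ and can be replaced
    by any dictionary for testing.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]
```

A script could call `missing_credentials()` before creating the client and stop with a clear message if the list is not empty. The check only makes sense when you rely on environment variables; Boto3 can also pick up credentials from other sources, such as a shared credentials file.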

Functions

Upload

```python
def upload_file(file_name, bucket, object_name=None):
    # Default the S3 key to the local file name
    if object_name is None:
        object_name = file_name
    try:
        s3_client = boto3.client('s3')
        # upload_file returns None on success and raises on failure
        s3_client.upload_file(file_name, bucket, object_name)
        print("File Uploaded")
    except Exception as e:
        print(e)
        return False
    return True
```

Parameters:

- `file_name`: The local file path and name that you want to upload.
- `bucket`: The name of the S3 bucket where the file will be uploaded.
- `object_name`: (Optional) The key or path under which the file will be stored in the bucket. If not provided, it defaults to the local file name.

Boto3 Client Initialization:

- The `boto3.client('s3')` statement initializes an S3 client from the Boto3 library, which is the official AWS SDK for Python. This client is used to interact with the S3 service.

Usage of Boto3 Library:

- The `boto3.client('s3')` statement and the subsequent `s3_client.upload_file` method demonstrate the use of the Boto3 library for AWS S3 operations.
- Boto3 simplifies the interaction with AWS services and abstracts away many of the low-level details.
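Because `object_name` defaults to `file_name`, uploading a file given as `reports/2024/test.txt` stores it under that whole path as its key. If you would rather key the object by the bare file name, a variant along these lines may help. This is a sketch, not the article's function: `upload_with_basename` is a hypothetical name, and the client is passed in as an argument rather than created inside the function.

```python
import os


def upload_with_basename(s3_client, file_name, bucket, object_name=None):
    """Upload file_name, defaulting the S3 key to the file's base name.

    Returns the key the file was stored under. Errors raised by the
    client are left to the caller to handle.
    """
    if object_name is None:
        # 'reports/2024/test.txt' becomes 'test.txt'
        object_name = os.path.basename(file_name)
    s3_client.upload_file(file_name, bucket, object_name)
    return object_name
```

For example, `upload_with_basename(boto3.client('s3'), 'reports/2024/test.txt', 'test-buckethashnode')` would store the file under the key `test.txt`. Passing the client in also makes the function easy to exercise with a stand-in object during testing.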

Delete

```python
def delete_file(file_name, bucket):
    try:
        s3_client = boto3.client('s3')
        response = s3_client.delete_object(Bucket=bucket, Key=file_name)
        print("File Deleted")
        print(response)
    except Exception as e:
        print(e)
        return False
    return True
```

Parameters:

- `file_name`: The key or path of the object within the S3 bucket that you want to delete.
- `bucket`: The name of the S3 bucket containing the file to be deleted.

Boto3 Client Initialization:

- The function starts by initializing an S3 client using `boto3.client('s3')`. This client is crucial for interacting with the AWS S3 service.

Usage of Boto3 Library:

- Similar to the `upload_file` function, this function utilizes the Boto3 library for AWS S3 operations.
- The `boto3.client('s3')` statement and the subsequent `s3_client.delete_object` method showcase the use of Boto3 to interact with the S3 service for file deletion.
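Unlike the upload call, `delete_object` returns a response dictionary. S3 answers a successful delete request with HTTP status 204, and for a bucket without versioning it typically does so even when the key never existed, so the "File Deleted" message confirms that the request was accepted rather than that an object was removed. The sketch below reads the status code from the response; `delete_and_confirm` is a hypothetical name, and the client is passed in as an argument.

```python
def delete_and_confirm(s3_client, bucket, key):
    """Delete key and report whether S3 acknowledged the request with HTTP 204."""
    response = s3_client.delete_object(Bucket=bucket, Key=key)
    # The HTTP status code lives under the ResponseMetadata entry
    status = response.get("ResponseMetadata", {}).get("HTTPStatusCode")
    return status == 204
```

If you need to know whether the object existed beforehand, that has to be checked separately before deleting.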

Download

```python
def download_file(file_name, bucket):
    try:
        s3_client = boto3.client('s3')
        # Save the object locally under the same name as its key
        s3_client.download_file(bucket, file_name, file_name)
        print("File Downloaded")
        # File link: presigned URL that stays valid for one hour
        file_link = s3_client.generate_presigned_url(
            'get_object',
            Params={'Bucket': bucket, 'Key': file_name},
            ExpiresIn=3600,
        )
        print(file_link)
    except Exception as e:
        print(e)
        return False
    return True
```

Parameters:

- `file_name`: The key or path of the object within the S3 bucket that you want to download.
- `bucket`: The name of the S3 bucket containing the file to be downloaded.

Usage of Boto3 Library:

- This function, like the others, utilizes the Boto3 library for AWS S3 operations.
- The `boto3.client('s3')` statement, the `s3_client.download_file` method, and the `s3_client.generate_presigned_url` method showcase the use of Boto3 to interact with the S3 service for file download. The presigned URL lets anyone holding the link fetch the object until `ExpiresIn` seconds (here 3600, one hour) have passed.
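The call `download_file(bucket, file_name, file_name)` uses the same string as the S3 key and as the local path, so a key such as `reports/test.txt` only downloads cleanly if a local `reports` directory already exists. The sketch below separates the two concerns; the function names and the `dest_dir` parameter are assumptions made for this example, and the client is passed in as an argument.

```python
import os


def download_to_dir(s3_client, bucket, key, dest_dir="."):
    """Download key into dest_dir under its base name and return the local path."""
    # Create the target directory if it is missing
    os.makedirs(dest_dir, exist_ok=True)
    local_path = os.path.join(dest_dir, os.path.basename(key))
    s3_client.download_file(bucket, key, local_path)
    return local_path


def presigned_get_url(s3_client, bucket, key, expires_in=3600):
    """Return a time-limited GET link for key (expires_in is in seconds)."""
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```

Keeping the link generation in its own function also means a link can be produced without downloading the file first.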

Test Case

```python
def main():
    # Upload the file, then download it again
    file_name = 'test.txt'
    bucket = 'test-buckethashnode'
    upload_file(file_name, bucket)
    download_file(file_name, bucket)


if __name__ == '__main__':
    main()
```
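The `main()` above exercises upload and download but never calls `delete_file`, so the test object stays in the bucket. One way to cover all three operations is a small runner like the sketch below. `round_trip` is a hypothetical helper; it takes the three functions as arguments and relies on the convention used in this article that each returns `True` on success and `False` on failure.

```python
def round_trip(upload, download, delete, file_name, bucket):
    """Run upload, download, and delete in order, stopping at the first failure.

    Each callable is called as step(file_name, bucket) and is expected to
    return True on success. Returns the names of the steps that succeeded.
    """
    completed = []
    steps = (("upload", upload), ("download", download), ("delete", delete))
    for name, step in steps:
        if not step(file_name, bucket):
            break
        completed.append(name)
    return completed
```

With the functions from this article it could be called as `round_trip(upload_file, download_file, delete_file, 'test.txt', 'test-buckethashnode')`; a result with fewer than three entries shows where the sequence stopped.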

Conclusion

In conclusion, this Python script shows a simple way to work with Amazon S3 through the Boto3 library, with one function each for uploading, downloading, and deleting files. Each function wraps its S3 call in a try/except block that prints the error and returns False, so a failed operation is reported to the caller instead of crashing the script. As more projects rely on cloud services for scalable and durable storage, these three operations are a useful foundation, and the script can serve as a starting point for adding S3 file handling to your own Python applications.